Continual Learning of Range-Dependent Transmission Loss

Project Snapshot

- Project Title: Continual Learning of Range-Dependent Transmission Loss for Underwater Acoustics Using Conditional Convolutional Neural Networks

- Type: Research

- Project Report: View Article (PDF)

- Concepts:

- Range-Dependent Conditional Convolutional Neural Network (RC-CAN)

- Continual Learning and Catastrophic Forgetting

- Replay-Based Training Strategy

- Reduced Order Modeling (ROM)

- Duration: 2023-24

- Skills Developed:

- Data Generation: Bellhop

- Data Manipulation & Analysis: pandas, numpy, scipy

- Data Visualization: matplotlib, seaborn

- Feature Engineering: scikit-learn

- Model Deployment: PyTorch

- Model Evaluation: PyTorch

- Others: Compute Canada

Project Overview

1. Objective

To develop a data-driven model using a range-dependent conditional convolutional neural network (RC-CAN) for accurate prediction of underwater acoustic transmission loss over varying ocean bathymetry, incorporating a continual learning framework.

2. Key Contributions

- Novel RC-CAN Architecture: Introduced a convolutional neural network that conditions on range-dependent ocean bathymetry to predict acoustic transmission loss.

- Replay-Based Training Strategy: Implemented a continual learning approach to generalize the model across different bathymetry profiles, mitigating catastrophic forgetting.

- Application to Real-World Scenarios: Demonstrated the model's effectiveness on both idealized and real-world bathymetry data, including Dickins Seamount in the Northeast Pacific.

Introduction and Background

1. Challenges in Underwater Acoustic Prediction:

- Traditional full-order numerical methods (e.g., solvers for the Navier-Stokes equations) are computationally intensive and unsuitable for real-time far-field noise prediction.

- Existing deep learning models such as Convolutional Recurrent Autoencoder Networks (CRAN) rely on autoregressive prediction and do not incorporate far-field bathymetry information.

2. Proposed Solution:

- Develop an RC-CAN model that incorporates ocean bathymetry data directly into the neural network.

- Employ a continual learning framework to enable the model to adapt to varying bathymetry profiles without forgetting previously learned information.

Methodology

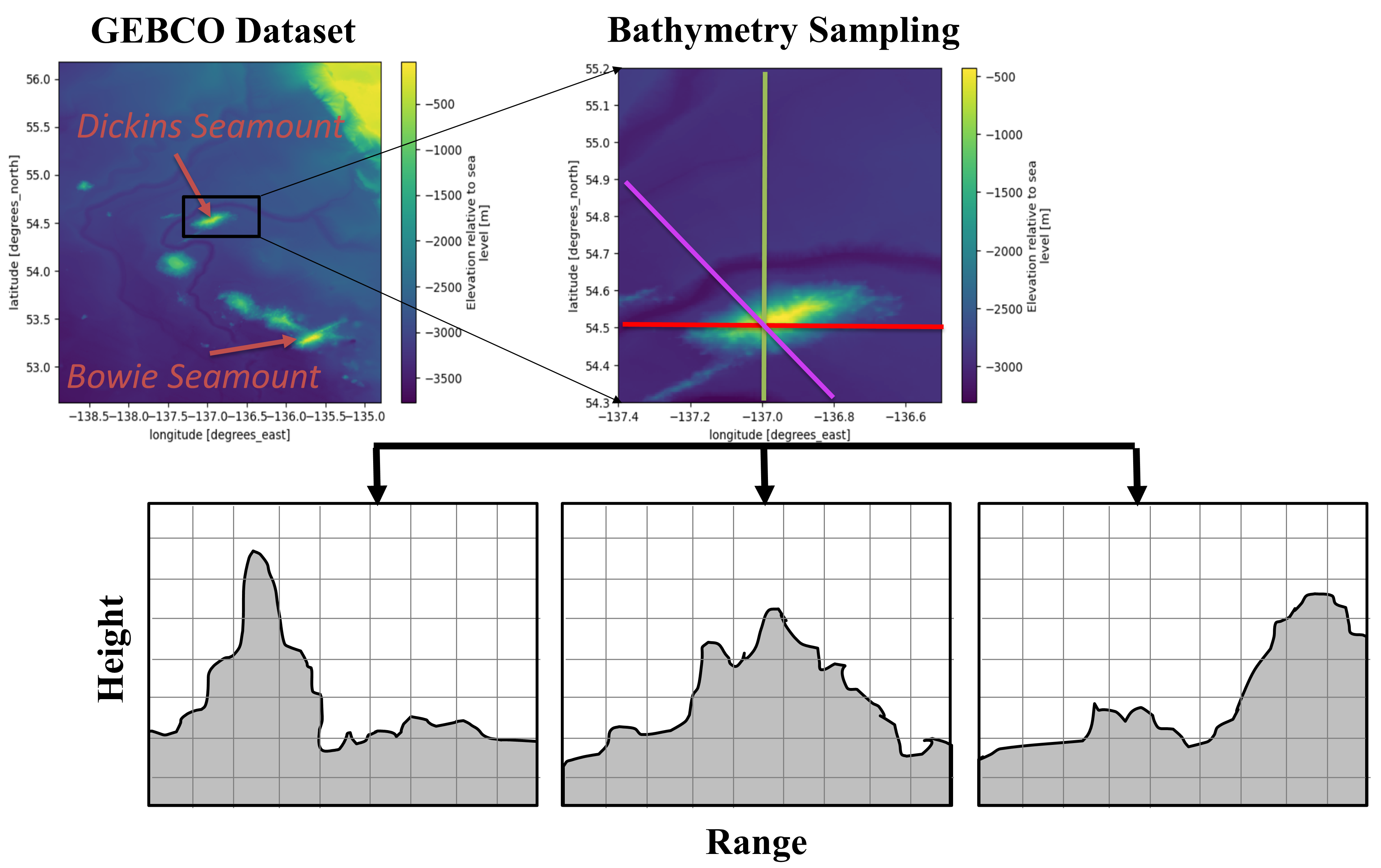

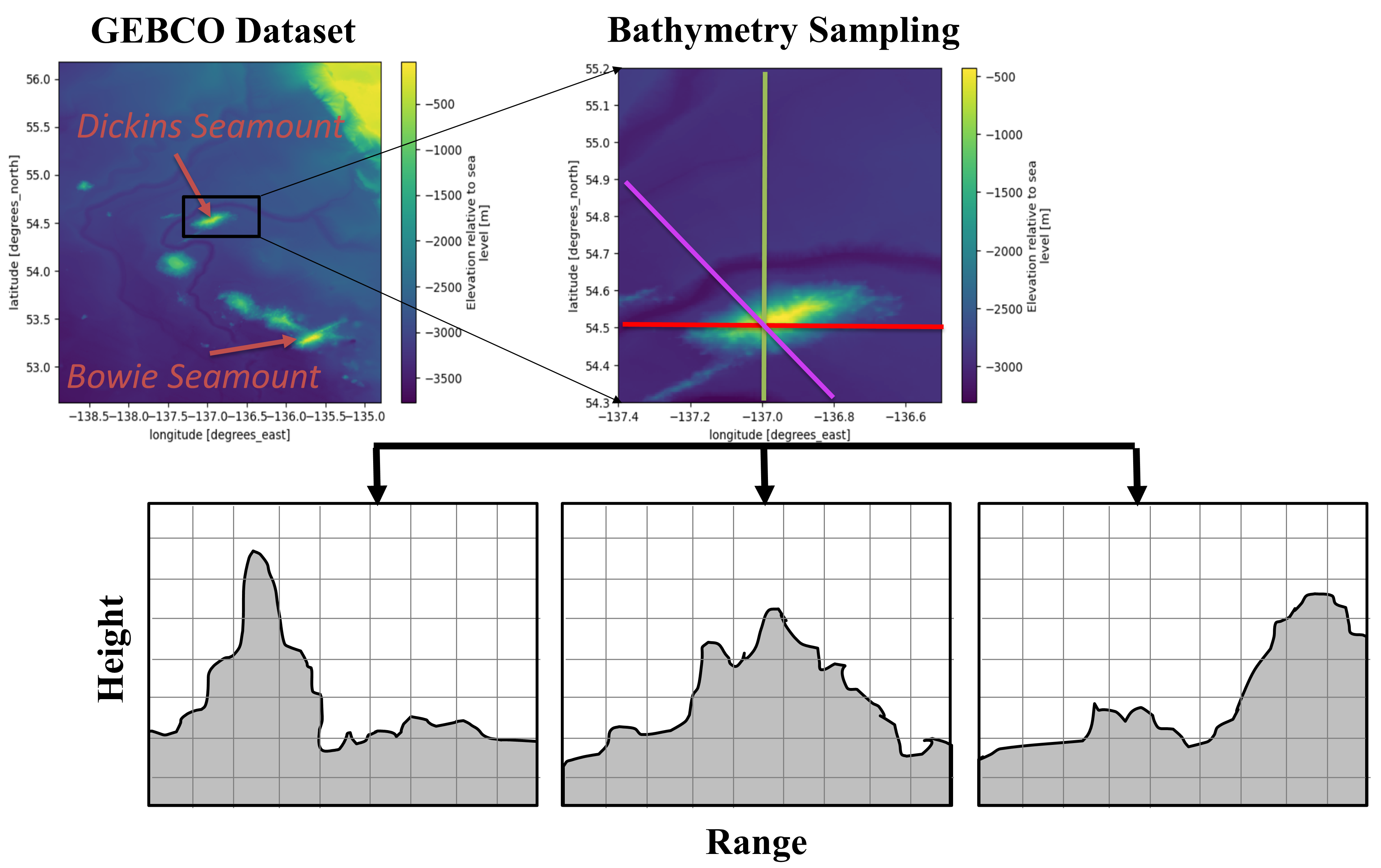

Figure 1: Schematic of sampling bathymetry profiles from the GEBCO database.
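The sampling step in Figure 1 can be sketched as reading depth along a radial transect from a gridded bathymetry product. This is a minimal sketch, not the project's code: it assumes the GEBCO tile is already loaded as a NumPy array of elevations (meters, negative below sea level) on ascending latitude/longitude axes. The helper name `sample_transect`, the flat-Earth offset approximation and the synthetic grid in the usage lines are all hypothetical.

```python
import numpy as np
from scipy.interpolate import RegularGridInterpolator

EARTH_RADIUS_M = 6_371_000.0


def sample_transect(lat, lon, elevation, lat0, lon0, bearing_deg, max_range_m, n_points):
    """Sample a range-dependent depth profile along a straight radial from (lat0, lon0).

    Hypothetical helper. Uses a local flat-Earth (equirectangular) approximation,
    which is reasonable for transects of a few tens of kilometres.
    """
    # Bilinear interpolation on the (lat, lon) grid; elevation has shape (len(lat), len(lon)).
    interp = RegularGridInterpolator((lat, lon), elevation, method="linear")
    ranges = np.linspace(0.0, max_range_m, n_points)
    theta = np.deg2rad(bearing_deg)  # bearing measured clockwise from north
    dlat = np.rad2deg(ranges * np.cos(theta) / EARTH_RADIUS_M)
    dlon = np.rad2deg(ranges * np.sin(theta) / (EARTH_RADIUS_M * np.cos(np.deg2rad(lat0))))
    points = np.column_stack([lat0 + dlat, lon0 + dlon])
    # GEBCO elevations are negative below sea level; flip the sign to get positive depth.
    depth = -interp(points)
    return ranges, depth


# Usage on a synthetic grid (hypothetical stand-in for a GEBCO tile around a seamount).
lat = np.linspace(52.0, 54.0, 201)
lon = np.linspace(-138.0, -135.0, 301)
LAT, LON = np.meshgrid(lat, lon, indexing="ij")
elevation = -3000.0 + 2500.0 * np.exp(-((LAT - 53.0) ** 2 + (LON + 136.5) ** 2) / 0.1)

# 50 km transect heading due east from the synthetic summit.
ranges, depth = sample_transect(lat, lon, elevation, 53.0, -136.5, 90.0, 50_000.0, 200)
```

Repeating this over several bearings around a summit yields a family of range-dependent profiles from a single gridded dataset.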

1. Dataset Creation

Training Data Generation:

- The RC-CAN model was trained on data generated by BELLHOP, a ray/beam tracing solver, which simulates transmission loss distributions across varying bathymetry conditions.

Sequential Training Process:

- Step 1: Started with training on an idealized seamount dataset to learn the basic relationship between bathymetry and transmission loss.

- Step 2: Continued training with wedge-profile datasets to introduce varying seafloor gradients.

- Step 3: Concluded with training on the Dickins Seamount dataset to adapt the model to realistic bathymetry profiles.

Model Adaptation:

- This sequential training enabled the RC-CAN model, initially trained on idealized bathymetries, to accurately predict transmission loss for the complex and realistic Dickins Seamount profile.

Dickins Seamount Dataset:

- Located in the Northeast Pacific Ocean, the Dickins Seamount provided a real-world bathymetry profile for model evaluation.

- Ocean bathymetry data around the Dickins Seamount was sampled to create realistic datasets for validating the model's performance.
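The idealized profiles used in Steps 1 and 2 can be illustrated with a short generator. This is a sketch under stated assumptions: the Gaussian seamount shape, the linear wedge, the grid sizes and the binary water/seabed mask used as the "bathymetry mesh" are hypothetical choices for illustration; the project's actual profile parameters and input encoding are not given here.

```python
import numpy as np


def gaussian_seamount(r, base_depth, height, center, width):
    """Seafloor depth (m, positive down) for an idealized Gaussian seamount."""
    return base_depth - height * np.exp(-(((r - center) / width) ** 2))


def wedge(r, depth_start, depth_end):
    """Seafloor depth that varies linearly with range (constant slope)."""
    return depth_start + (depth_end - depth_start) * (r - r[0]) / (r[-1] - r[0])


def bathymetry_mask(seafloor_depth, z):
    """Rasterize a profile onto a (depth, range) mesh: 1.0 = water column, 0.0 = seabed."""
    return (z[:, None] <= seafloor_depth[None, :]).astype(np.float32)


r = np.linspace(0.0, 20_000.0, 256)  # range axis (m)
z = np.linspace(0.0, 4_000.0, 128)   # depth axis (m)

seamount_mask = bathymetry_mask(gaussian_seamount(r, 3500.0, 2000.0, 10_000.0, 2000.0), z)
# A small family of wedges with different slopes (hypothetical parameter sweep).
wedge_masks = [bathymetry_mask(wedge(r, 1000.0, d_end), z) for d_end in (1500.0, 2500.0, 3500.0)]
```

Each mask would be paired with the transmission loss field that BELLHOP computes for the same profile, giving one input/target training pair per bathymetry.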

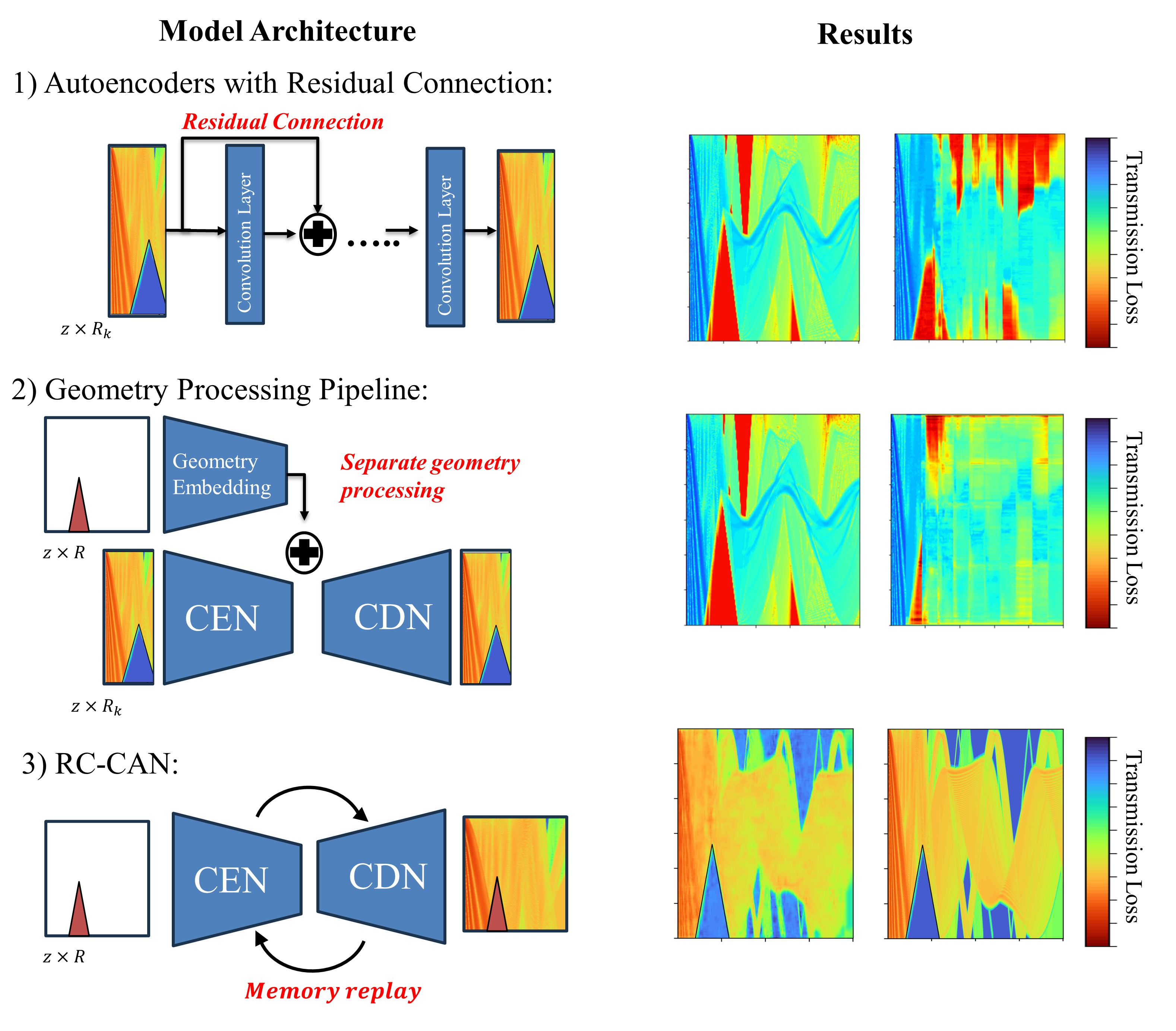

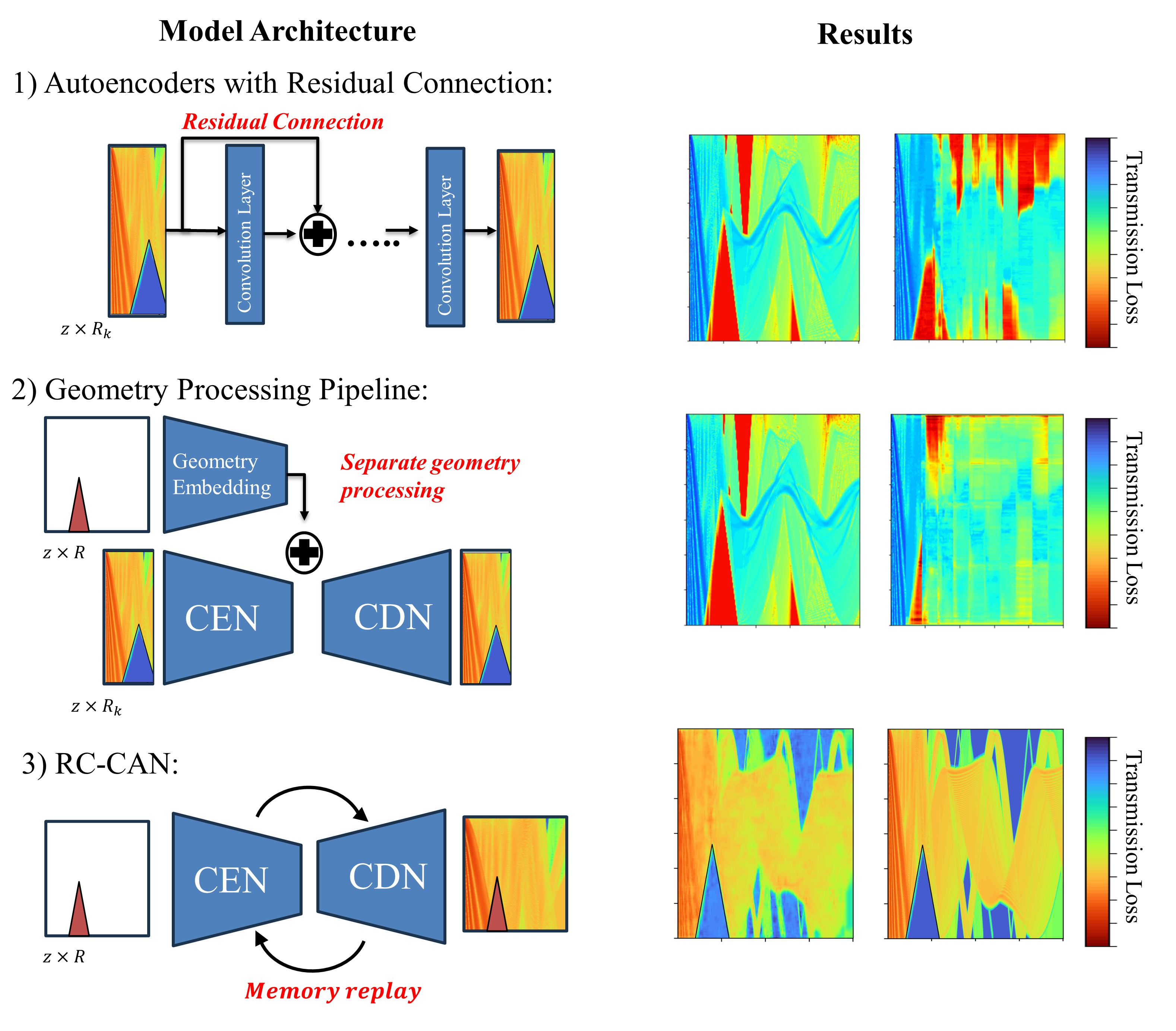

Figure 2: Comparison of three different ML architectures and results.

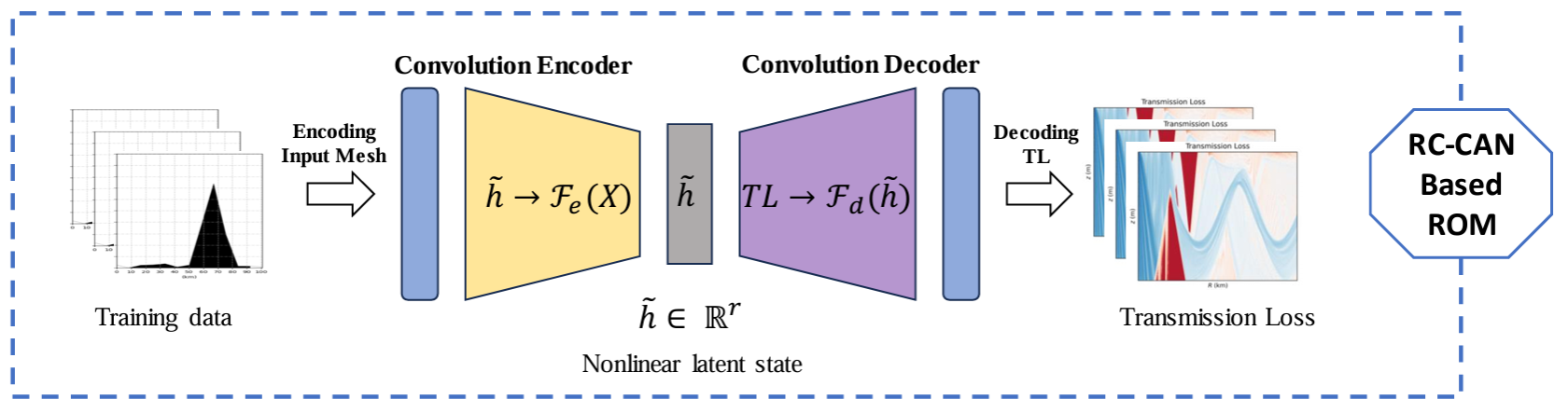

2. RC-CAN Architecture

Architecture Overview:

- Network Structure: The RC-CAN model is a 2D convolutional network consisting of two main components:

- Encoder Path (\(\Psi_E\)): Encodes the input ocean bathymetry mesh into a low-dimensional latent space.

- Decoder Path (\(\Psi_D\)): Decodes the latent representation to predict the transmission loss on the input mesh.

Encoder Path Details:

- Comprises four padded convolutional layers.

- Each layer is followed by batch normalization and Leaky ReLU activation.

- A max pooling operation is applied after each convolution to downsample the feature maps.

Decoder Path Details:

- Up-samples the feature maps before each transpose convolution.

- Consists of four transpose convolutional layers (up-convolutions).

- Each layer is followed by batch normalization and Leaky ReLU activation.

- The final layer is a 2D convolution with a single output channel, so the predicted transmission loss field matches the single-channel input mesh.

- Total Convolutional Layers: The network comprises a total of 8 convolutional layers.
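A quick way to reason about the encoder/decoder described above is to trace the feature-map sizes through each block. The sketch below assumes 3x3 kernels with padding 1, 2x2 max pooling with stride 2, 2x up-sampling before each transpose convolution, and the channel counts shown; these values are hypothetical and not taken from the project.

```python
def conv_out(n, kernel=3, stride=1, padding=1):
    """Output length of a (padded) convolution along one axis."""
    return (n + 2 * padding - kernel) // stride + 1


def transpose_conv_out(n, kernel=3, stride=1, padding=1):
    """Output length of a transpose convolution along one axis (no output padding)."""
    return (n - 1) * stride - 2 * padding + kernel


def trace_shapes(height, width, enc_channels=(16, 32, 64, 128), dec_channels=(64, 32, 16, 8)):
    """Return (stage, channels, height, width) after each block of the encoder-decoder."""
    shapes = [("input", 1, height, width)]
    h, w = height, width
    for c in enc_channels:
        # Padded 3x3 conv keeps the size (BatchNorm/LeakyReLU do not change it),
        # then 2x2 max pooling with stride 2 halves it (floor division).
        h, w = conv_out(h) // 2, conv_out(w) // 2
        shapes.append(("encoder", c, h, w))
    for c in dec_channels:
        # 2x up-sampling, then a size-preserving 3x3 transpose convolution.
        h, w = transpose_conv_out(2 * h), transpose_conv_out(2 * w)
        shapes.append(("decoder", c, h, w))
    # Final 2D convolution with a single output channel.
    shapes.append(("output", 1, conv_out(h), conv_out(w)))
    return shapes


for stage in trace_shapes(128, 256):
    print(stage)
```

Under these assumptions a 128 x 256 mesh reaches an 8 x 16 latent map and is restored to 128 x 256, while a 100 x 250 mesh would come back as 96 x 240. With four pooling stages, mesh dimensions divisible by 2^4 = 16 (or explicit padding/cropping) keep the output aligned with the input.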

3. Replay-Based Training Strategy

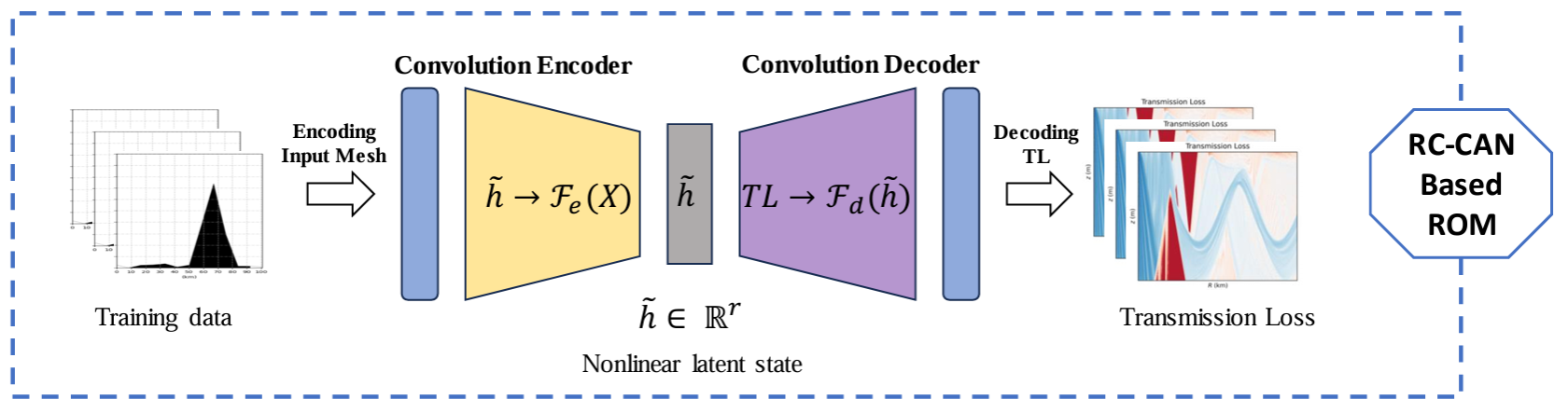

Figure 3: An illustration of the proposed range conditional convolutional network.


Continual Learning Approach:

- Challenge: Models tend to forget previously learned information when trained sequentially on new data (catastrophic forgetting).

- Solution: Implement a replay-based training method that stores a subset of data from earlier training stages and periodically replays it while the model trains on new data.

- Benefits: Enables the model to adapt to new bathymetry profiles while retaining knowledge from earlier training.
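The replay idea can be sketched as a fixed-size memory that is mixed into each new training stage. The source does not say how the stored subset is chosen, so this sketch assumes reservoir sampling (a common choice that keeps a uniform sample of everything seen so far); the buffer capacity, replay fraction, class and function names are hypothetical, and the actual training call is left as a comment.

```python
import random


class ReplayBuffer:
    """Fixed-capacity memory of past samples, filled by reservoir sampling.

    Reservoir sampling keeps a uniform random subset of everything seen so far,
    so earlier datasets stay represented as new ones arrive.
    """

    def __init__(self, capacity, seed=0):
        self.capacity = capacity
        self.samples = []
        self.n_seen = 0
        self.rng = random.Random(seed)

    def add(self, sample):
        self.n_seen += 1
        if len(self.samples) < self.capacity:
            self.samples.append(sample)
        else:
            # Replace a random slot with probability capacity / n_seen.
            j = self.rng.randrange(self.n_seen)
            if j < self.capacity:
                self.samples[j] = sample


def build_stage_dataset(new_data, buffer, replay_fraction, rng):
    """Mix the new stage's data with replayed samples from earlier stages."""
    n_replay = min(len(buffer.samples), int(replay_fraction * len(new_data)))
    mixed = list(new_data) + rng.sample(buffer.samples, n_replay)
    rng.shuffle(mixed)
    return mixed


# Sequential curriculum from the text: seamount -> wedge -> Dickins Seamount.
# Samples are placeholder (dataset, index) tuples standing in for BELLHOP fields.
stages = {name: [(name, i) for i in range(100)] for name in ("seamount", "wedge", "dickins")}
buffer = ReplayBuffer(capacity=60)
rng = random.Random(1)
replayed_per_stage = {}

for name, data in stages.items():
    mixed = build_stage_dataset(data, buffer, replay_fraction=0.5, rng=rng)
    replayed_per_stage[name] = sum(1 for s in mixed if s[0] != name)
    # train_one_stage(model, mixed)  # hypothetical training call, omitted here
    for sample in data:
        buffer.add(sample)
```

The first stage trains on new data only; every later stage sees its own data plus replayed samples from all earlier stages, which is what counteracts catastrophic forgetting.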

Training Procedure:

- Optimizer: AdamW (Adam with decoupled weight decay).

- Learning Rate Scheduler: Cosine annealing with warm restarts, which decays the learning rate along a cosine curve and periodically resets it to its initial value.

- Loss Function: Minimize the L2-norm reconstruction error between predicted and ground-truth transmission loss.

- Evaluation Metric: Structural Similarity Index Measure (SSIM) to compare predicted and ground truth data.
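The scheduler and loss above can be written out explicitly. In PyTorch they correspond to `torch.optim.AdamW` and `torch.optim.lr_scheduler.CosineAnnealingWarmRestarts` (parameters `T_0`, `T_mult`, `eta_min`); the pure-Python sketch below reproduces the per-epoch cosine-with-restarts formula so the schedule can be inspected without a training loop. The values `lr_max=1e-3`, `t0=10` and `t_mult=2` are hypothetical, not the project's settings.

```python
import math

import numpy as np


def cosine_warm_restarts(epoch, lr_max, lr_min=0.0, t0=10, t_mult=1):
    """Learning rate at a given epoch under cosine annealing with warm restarts (SGDR)."""
    t_i, t_cur = t0, epoch
    # Walk through completed cycles; each new cycle is t_mult times longer.
    while t_cur >= t_i:
        t_cur -= t_i
        t_i *= t_mult
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * t_cur / t_i))


def l2_loss(pred, target):
    """L2-norm reconstruction error between predicted and reference TL fields."""
    return float(np.linalg.norm(np.asarray(pred) - np.asarray(target)))


# Cycles of 10, 20, 40, ... epochs; the rate jumps back to lr_max at epochs 10 and 30.
schedule = [cosine_warm_restarts(e, lr_max=1e-3, t0=10, t_mult=2) for e in range(40)]
```

The restarts let the optimizer escape a narrow minimum reached at the end of one cycle, which can be useful when each continual-learning stage shifts the data distribution.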

Results and Analysis

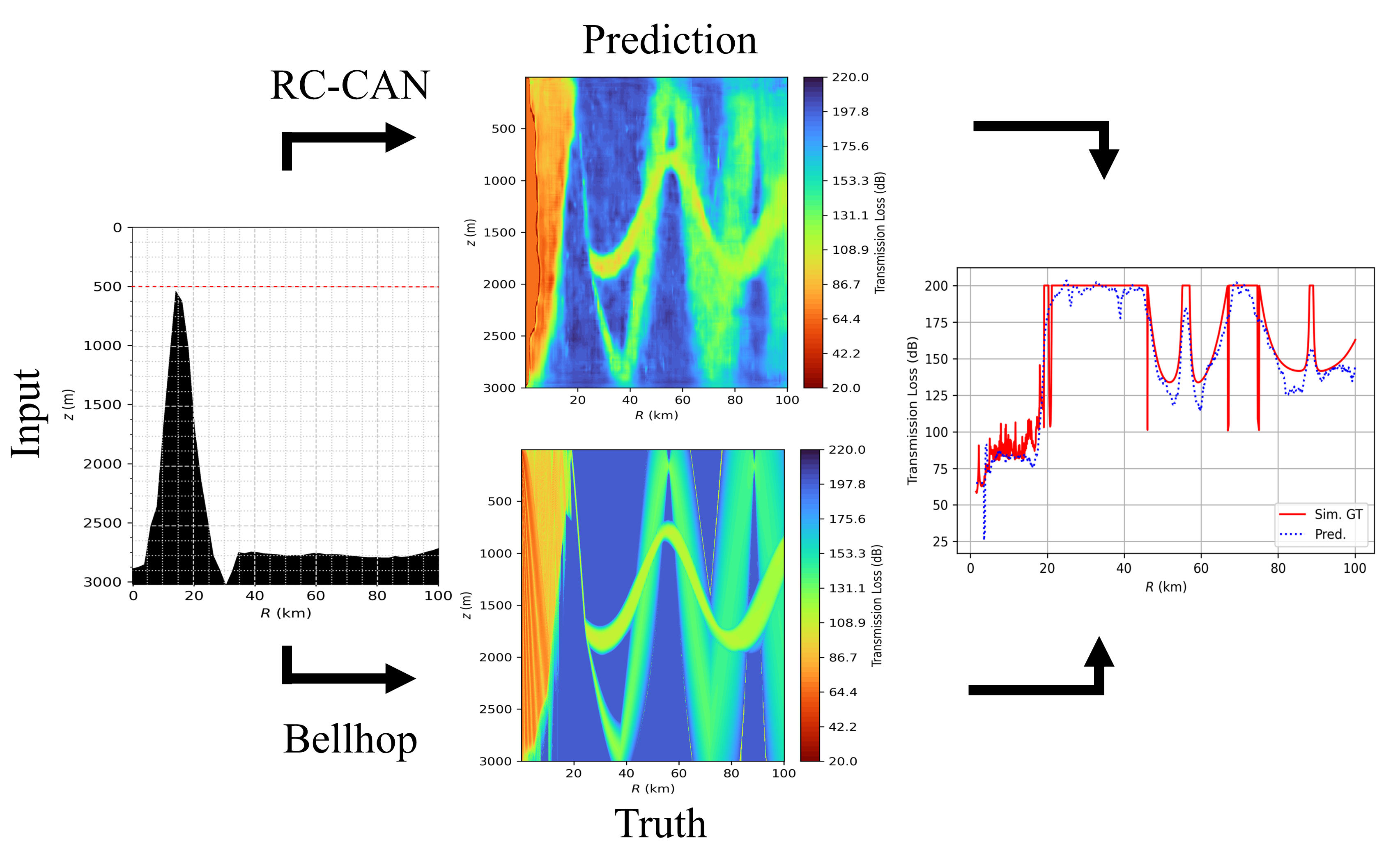

Model Performance:

- Accuracy: Achieved SSIM values of up to 90% when predicting transmission loss over Dickins Seamount.

- Generalization: Successfully predicted transmission loss for bathymetry profiles not seen during training.

- Single-Shot Prediction: Predicts the full transmission loss field in one forward pass, eliminating the need for autoregressive methods and improving efficiency and accuracy.

Figure 4: Transmission loss prediction for Dickins Seamount.
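An SSIM of 90% corresponds to a score of 0.90, where 1.0 means the predicted and reference fields are structurally identical. The sketch below is a simplified SSIM (uniform 7x7 windows, population statistics, constants K1 = 0.01 and K2 = 0.03) intended only to show how such a score is formed; it is not necessarily the implementation used in the project, and scikit-image's `structural_similarity` is a more complete option. The random "TL fields" are synthetic placeholders.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def ssim(pred, target, win_size=7, data_range=None):
    """Mean SSIM over all win_size x win_size windows (simplified, uniform weights)."""
    pred = np.asarray(pred, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    if data_range is None:
        data_range = target.max() - target.min()
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2

    def local_mean(a):
        # Average over every sliding window; output shape is (H - w + 1, W - w + 1).
        return sliding_window_view(a, (win_size, win_size)).mean(axis=(-2, -1))

    mu_x, mu_y = local_mean(pred), local_mean(target)
    var_x = local_mean(pred * pred) - mu_x ** 2
    var_y = local_mean(target * target) - mu_y ** 2
    cov_xy = local_mean(pred * target) - mu_x * mu_y
    # Luminance/contrast/structure comparison combined into one map, then averaged.
    ssim_map = ((2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    )
    return float(ssim_map.mean())


rng = np.random.default_rng(0)
reference = rng.uniform(40.0, 100.0, size=(64, 128))  # stand-in TL field (dB)
slightly_off = reference + rng.normal(0.0, 5.0, reference.shape)
badly_off = reference + rng.normal(0.0, 20.0, reference.shape)
```

Because SSIM compares local means, variances and covariances rather than pointwise errors, it rewards predictions that reproduce the spatial pattern of shadow zones and convergence zones even when absolute levels differ slightly.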

Comparison with Existing Models:

- Outperformed CRAN-type architectures in far-field predictions.

- Demonstrated the importance of incorporating bathymetry information into the model input.

Implications:

- Real-Time Prediction: Potential for integration into adaptive management systems for underwater noise mitigation.

- Environmental Impact: Assists in reducing the impact of underwater noise on marine mammals by providing accurate noise mapping.

Conclusions

Summary of Findings:

- The RC-CAN model effectively captures the complex relationship between ocean bathymetry and acoustic transmission loss.

- The replay-based continual learning strategy enhances the model's ability to generalize across diverse bathymetry profiles.

- The single-shot prediction approach addresses limitations of previous autoregressive models.

Future Work:

- Integration of higher-fidelity solvers or experimental data to further improve model accuracy.

- Extension of the continual learning framework to accommodate more complex and varying environmental parameters.

- Deployment in real-world scenarios for real-time underwater noise monitoring and mitigation.

Final Remarks

This project demonstrates the successful application of advanced deep learning techniques to a complex physical problem in underwater acoustics. By integrating ocean bathymetry data directly into the neural network and employing a continual learning framework, the RC-CAN model achieves high accuracy in predicting transmission loss across varying environments. The approach addresses key limitations of existing methods and offers significant potential for real-time environmental monitoring and mitigation strategies in marine operations.